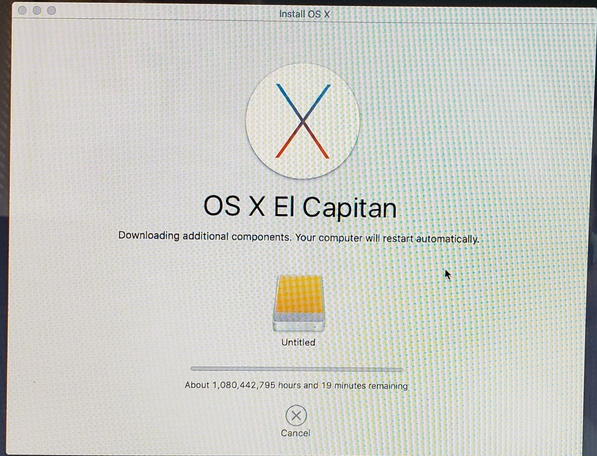

I got this message when I was rebuilding my father-in-law’s 2007 iMac. It happened at one point when I was trying to install on OS on the boot drive.

This might be the most realistic time-to-complete estimate I’ve ever seen in an Apple installer:

(I haven’t talked about that computer. I got it a couple of years ago and I’ve been meaning to upgrade it, just like I did with our own 2007 iMac. The only difference from that upgrade plan was I only used a 1 TB drive for the boot drive. The use case is to back up some computers at work, so I added a 4 TB external drive. I would have prefered making it internal, so I could use the SATA connection rather than a USB 2.0 external drive. But … sigh. Apple. There isn’t really room for another drive (yes, I know about replacing the Superdrive with in internal drive) but beyond that, getting at the drive, in case it fails and needs replacing is so incredibly hard, that I decided I could live with slow backups.)